In the rapidly evolving world of AI and large language models (LLMs), engineers and creators constantly seek methods to push model performance beyond surface-level responses. One such approach is Generated Knowledge Prompting: a technique that asks the model first to generate relevant knowledge or context, and then to answer the question using it. This can make the response more accurate, more nuanced, and easier to explain.

In this article, we’ll cover what Generated Knowledge Prompting is, why it matters, how it works, when to use it, and a concrete example you can try today.

What Is Generated Knowledge Prompting?

Generated Knowledge Prompting is a technique where you ask the LLM to produce intermediate knowledge or facts before asking it to solve a task or answer a question. In other words:

- First: “Generate knowledge relevant to this problem.”

- Then: “Use that knowledge to answer the main question.”

The “knowledge” generated may include definitions, background information, comparisons, factual context, assumptions, or reasoning steps. It is then included in the prompt for the final answer, which tends to improve that answer’s quality.

Why Generated Knowledge Prompting Matters

- Improved Accuracy – By asking the model to self-generate supporting facts, you reduce the risk of it missing key context or relying on incomplete knowledge.

- Better Reasoning – The model is forced to think through “What do I know?” before “What do I conclude?”

- Reduced Hallucination – Since the model first lays out facts, it is more likely to ground its subsequent answer.

- Enhanced Transparency – The intermediate knowledge provides visibility into the model’s thinking.

- Scalability – Because high-capacity models already encode broad knowledge, generated knowledge can support complex tasks (e.g., domain-specific reasoning) without first building a large external knowledge base.

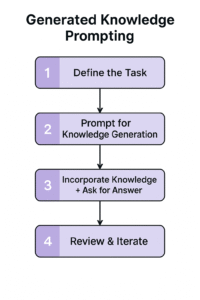

How Generated Knowledge Prompting Works — Step by Step

Here’s a simplified workflow:

- Define the Task

– Example: “Is it correct that ‘Playing golf means getting a higher total score than your opponent’?”

- Prompt for Knowledge Generation

– Ask: “Generate relevant knowledge/facts about golf scoring.”

– The model outputs: “In golf, the objective is to complete each hole in as few strokes as possible. A round typically consists of 18 holes…”

- Incorporate Knowledge + Ask for Answer

– Then ask: “Using the knowledge above, answer the original question and provide explanation.”

– The model then refers to the knowledge before providing the answer.

- Review & Iterate

– Check if the generated knowledge is relevant and accurate.

– Refine prompt if needed or add constraints (e.g., “List 3 facts and cite sources”).
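
The workflow above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `generated_knowledge_answer`, the `LLM` type alias, and `fake_llm` are hypothetical names introduced here, and `fake_llm` returns canned text so the example runs offline. In practice you would pass in a function that calls whichever model API you use.

```python
from typing import Callable

# Assumption: an "LLM" is any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


def generated_knowledge_answer(question: str, topic: str, llm: LLM, n_facts: int = 3) -> dict:
    """Run the two-step workflow and return the knowledge, the final prompt, and the answer."""
    # Knowledge generation: ask for facts only, not for an answer yet.
    knowledge_prompt = f"Generate {n_facts} relevant facts about {topic}."
    knowledge = llm(knowledge_prompt)

    # Answering: place the generated knowledge in the prompt, then ask the question.
    answer_prompt = (
        f"Knowledge:\n{knowledge}\n\n"
        "Using the knowledge above, answer the question and explain your reasoning.\n"
        f"Question: {question}"
    )
    answer = llm(answer_prompt)

    # Returning the knowledge as well lets a reviewer check it before trusting the answer.
    return {"knowledge": knowledge, "answer_prompt": answer_prompt, "answer": answer}


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in with canned responses; replace with a real model call."""
    if prompt.startswith("Generate"):
        return "In golf, the objective is to complete each hole in as few strokes as possible."
    return "No. In golf the lower total score wins."


result = generated_knowledge_answer(
    question="Is it correct that playing golf means getting a higher total score than your opponent?",
    topic="golf scoring",
    llm=fake_llm,
)
print(result["answer"])
```

Keeping the two calls separate, rather than asking for facts and answer in one prompt, is what makes the review step possible: the knowledge can be inspected, edited, or rejected before it reaches the answering prompt.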

When to Use Generated Knowledge Prompting

This approach is especially useful when:

- The domain is specialized or technical (legal, medical, scientific, financial).

- The question requires background context for a correct answer.

- You’re dealing with ambiguous or complex statements (for example, common-sense reasoning or counterintuitive facts).

- You want explainable AI outputs (for audits, compliance).

- You need structured reasoning and transparency in the process.

Example of Generated Knowledge Prompting

Task: Assess this statement: “A fish cannot think.”

Step 1 – Knowledge Generation Prompt:

“Generate 3 relevant knowledge facts about fish cognition and brain structure.”

Expected output (knowledge):

- Fish have long-term memory and can navigate mazes, showing evidence of learning.

- Some fish species form complex social relationships and show behavioral flexibility.

- The fish brain has regions analogous to those of higher vertebrates that support decision-making and spatial memory.

Step 2 – Answering Prompt (incorporating knowledge):

“Using the knowledge above, evaluate the statement: ‘A fish cannot think.’ Provide your reasoning and final verdict.”

Expected result:

The model uses the knowledge to argue that fish exhibit many traits associated with thinking, concludes that the statement is incorrect, and then summarises its reasons.
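
To make the phrase “using the knowledge above” concrete, here is one way the Step 2 prompt could be assembled from the Step 1 facts. The helper name `build_answer_prompt` and the exact layout of the prompt are assumptions made for illustration. The facts are the expected outputs listed in this example, not real model output.

```python
def build_answer_prompt(statement: str, facts: list[str]) -> str:
    """Combine generated facts and the statement into a single answering prompt."""
    # Number the facts so the model (and a reviewer) can refer to them individually.
    numbered = "\n".join(f"{i}. {fact}" for i, fact in enumerate(facts, start=1))
    return (
        "Knowledge:\n"
        f"{numbered}\n\n"
        f"Using the knowledge above, evaluate the statement: '{statement}' "
        "Provide your reasoning and final verdict."
    )


facts = [
    "Fish have long-term memory and can navigate mazes, showing evidence of learning.",
    "Some fish species form complex social relationships and show behavioral flexibility.",
    "The fish brain has regions analogous to those of higher vertebrates "
    "that support decision-making and spatial memory.",
]
prompt = build_answer_prompt("A fish cannot think.", facts)
print(prompt)
```

The printed string is what would be sent to the model in Step 2.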

Best Practices for Generated Knowledge Prompting

- Be explicit: Ask the model exactly how many knowledge items you want (e.g., “List 4 key facts”).

- Specify format: Clearly instruct how to format the knowledge (bullets, numbered list).

- Guard quality: Review the generated knowledge for accuracy and bias.

- Use constraints: Add instructions such as “Do not invent facts” or “Base your facts only on established science.”

- Chain prompts: Generate knowledge, then reuse it in your final prompt.

- Tailor to context: For domain-specific tasks, consider attaching relevant dataset summaries or external references.
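
Asking for an explicit count and format also makes the knowledge step easy to check in code. The sketch below assumes the model was asked for a bulleted or numbered list. `parse_facts` is a hypothetical helper, and `raw_output` is hand-written sample text rather than real model output. It only checks structure; whether the facts are true still needs review.

```python
import re


def parse_facts(raw: str, expected: int) -> list[str]:
    """Extract bulleted or numbered items and check that the requested count came back."""
    facts = []
    for line in raw.splitlines():
        # Accept "- fact", "* fact", "1. fact" or "1) fact"; skip any other line.
        match = re.match(r"^\s*(?:[-*]|\d+[.)])\s+(.+)$", line)
        if match:
            facts.append(match.group(1).strip())
    if len(facts) != expected:
        raise ValueError(f"expected {expected} facts, got {len(facts)}")
    return facts


raw_output = """Here are the facts:
1. Golf is scored by counting strokes.
2. The lowest total score wins.
3. A round typically consists of 18 holes.
"""
facts = parse_facts(raw_output, expected=3)
print(facts)
```

A failed count is a cheap signal to retry or tighten the knowledge prompt before spending tokens on the answering step.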

Limitations and Considerations

- Models may still generate incorrect or misleading “knowledge” — always validate for high-stakes tasks.

- The method uses more tokens and a second model call (a knowledge generation step plus an answer step), so cost and latency increase.

- For tasks with well-structured data sources, simple retrieval might work better.

- If the knowledge domain is ultra-specialised, external validation or human oversight remains crucial.
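
The token overhead can be estimated with simple arithmetic: the generated knowledge is counted once as output of the first call and again as input to the second. The function name and the token counts below are illustrative assumptions, not measurements from any particular model.

```python
def two_step_token_usage(
    knowledge_prompt_tokens: int,
    knowledge_tokens: int,
    question_tokens: int,
    answer_tokens: int,
) -> tuple[int, int]:
    """Return (input_tokens, output_tokens) across both calls of the two-step workflow."""
    # Call 1 reads the knowledge prompt; call 2 reads the knowledge plus the question.
    input_tokens = knowledge_prompt_tokens + (knowledge_tokens + question_tokens)
    # Call 1 writes the knowledge; call 2 writes the answer.
    output_tokens = knowledge_tokens + answer_tokens
    return input_tokens, output_tokens


# Illustrative numbers only: a 40-token question answered directly in 100 tokens
# uses 40 input and 100 output tokens.
usage = two_step_token_usage(
    knowledge_prompt_tokens=20,
    knowledge_tokens=150,
    question_tokens=40,
    answer_tokens=100,
)
print(usage)  # (210, 250)
```

With these made-up numbers, the two-step version uses 210 input and 250 output tokens, so the overhead grows mainly with the length of the generated knowledge. Capping the number of facts is the most direct way to control it.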

The Future of Generated Knowledge Prompting

In 2026 and beyond, as LLMs become more capable and multimodal, generated knowledge prompting is likely to integrate with:

- Autonomous agents that self-generate context before acting.

- Hybrid systems combining retrieval + generation for richer knowledge.

- Explainable AI workflows, where reasoning with generated knowledge becomes audit-ready.

- Domain-specific prompting frameworks where knowledge generation is automated as the first stage.

Mastering this technique will help AI practitioners, content creators, analysts, HR specialists, and tech teams elevate model outputs to professional-grade quality.

Generated Knowledge Prompting is a practical technique for enhancing AI responses by adding a knowledge generation layer before the task itself. For anyone who wants more accurate, transparent, and high-quality AI output in 2026, it is well worth adding to the toolkit.